One2Scene: Geometric Consistent Explorable 3D Scene Generation from a Single Image

Abstract

Generating explorable 3D scenes from a single image is a highly challenging problem in 3D vision. Existing methods struggle to support free exploration, often producing severe geometric distortions and noisy artifacts when the viewpoint moves far from the original perspective. We introduce One2Scene, an effective framework that decomposes this ill-posed problem into three tractable subtasks to enable immersive explorable scene generation. We first use a panorama generator to produce anchor views from a single input image as initialization. Then, we lift these 2D anchors into an explicit 3D geometric scaffold via a generalizable, feed-forward Gaussian Splatting network. Rather than directly reconstructing from the panorama, we reformulate the task as multi-view stereo matching across sparse anchors, which allows us to leverage robust geometric priors learned from large-scale multi-view data. A bidirectional feature fusion module is used to enforce cross-view consistency, yielding an efficient and geometrically reliable scaffold. Finally, the scaffold serves as a strong prior for a novel view generator that can produce photorealistic and geometrically accurate views at arbitrary cameras. By explicitly constructing and conditioning on a 3D-consistent scaffold, One2Scene works stably under large camera motions, facilitating immersive scene exploration. Extensive experiments show that One2Scene substantially outperforms state-of-the-art methods in panorama depth estimation, feed-forward 360° reconstruction, and explorable 3D scene generation.
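The page does not say how anchor views are taken from the generated panorama. Below is a minimal sketch. It assumes the panorama is equirectangular and that the anchors are pinhole crops at evenly spaced yaw angles. Each anchor pixel is sampled by casting one ray per pixel and looking up that ray's longitude and latitude in the panorama. The function name, field of view, resolution, and nearest-neighbour sampling are illustrative assumptions, not details of One2Scene.

```python
import numpy as np

# Hypothetical helper: One2Scene's actual anchor layout is not specified on this page.
def perspective_from_equirect(pano, yaw_deg, pitch_deg=0.0, fov_deg=90.0, size=256):
    """Sample a pinhole view from an equirectangular panorama (nearest neighbour).

    pano: (H, W, C) array covering 360 deg longitude x 180 deg latitude.
    Returns the (size, size, C) view, its 3x3 intrinsics K, and camera-to-world rotation R.
    """
    H, W = pano.shape[:2]
    f = 0.5 * size / np.tan(np.radians(fov_deg) / 2)  # focal length in pixels
    K = np.array([[f, 0, size / 2], [0, f, size / 2], [0, 0, 1.0]])

    # Ray through every pixel centre in camera coords (x right, y down, z forward).
    u, v = np.meshgrid(np.arange(size) + 0.5, np.arange(size) + 0.5)
    dirs = np.stack([(u - K[0, 2]) / f, (v - K[1, 2]) / f, np.ones_like(u)], axis=-1)
    dirs /= np.linalg.norm(dirs, axis=-1, keepdims=True)

    # Rotate rays: pitch about the x axis, then yaw about the vertical y axis.
    yaw, pitch = np.radians(yaw_deg), np.radians(pitch_deg)
    Rx = np.array([[1, 0, 0],
                   [0, np.cos(pitch), -np.sin(pitch)],
                   [0, np.sin(pitch), np.cos(pitch)]])
    Ry = np.array([[np.cos(yaw), 0, np.sin(yaw)],
                   [0, 1, 0],
                   [-np.sin(yaw), 0, np.cos(yaw)]])
    R = Ry @ Rx
    world = dirs @ R.T

    # Longitude 0 is the panorama centre column; positive latitude points down.
    lon = np.arctan2(world[..., 0], world[..., 2])
    lat = np.arcsin(np.clip(world[..., 1], -1.0, 1.0))
    px = ((lon / (2 * np.pi) + 0.5) * W).astype(int) % W
    py = np.clip(((lat / np.pi + 0.5) * H).astype(int), 0, H - 1)
    return pano[py, px], K, R
```

For example, `[perspective_from_equirect(pano, yaw, fov_deg=120.0) for yaw in range(0, 360, 60)]` produces six views with known `K` and `R`. Each view overlaps its neighbours by 60°. A multi-view stereo formulation needs calibrated, overlapping views like these, because depth comes from matching content shared between anchors. The anchor count and spacing here are hypothetical.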

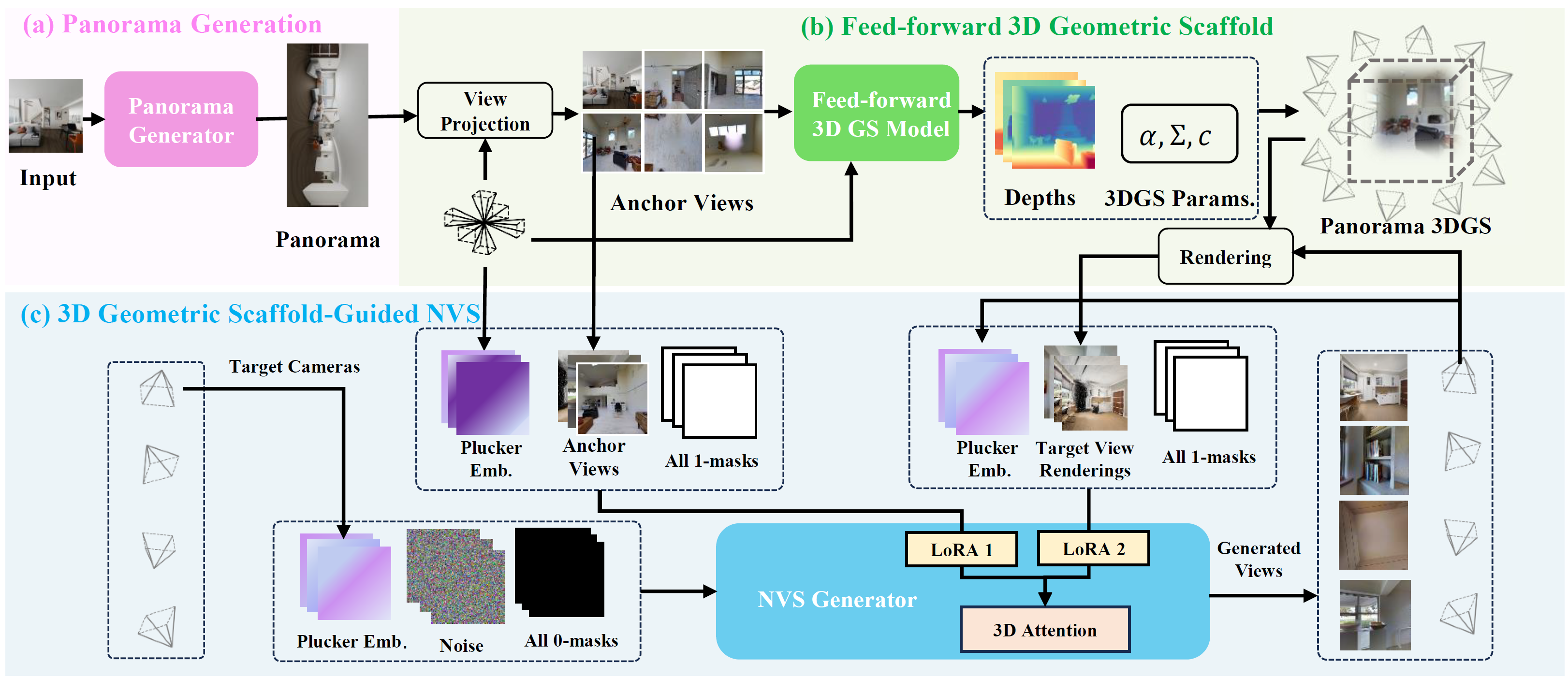

One2Scene Overview

Our method consists of three stages: (a) an anchor view generation stage to establish an initial 360-degree representation, (b) a feed-forward 3D Gaussian Splatting stage to construct an explicit 3D geometric scaffold, and (c) a synthesis stage that leverages the scaffold information to produce high-quality novel views. The pipeline enables geometrically consistent and photorealistic novel view synthesis from a single input image.
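Stages (b) and (c) can be illustrated with a simplified stand-in. The sketch assumes per-pixel depth is available for each anchor, as many pixel-aligned feed-forward Gaussian Splatting networks predict. It lifts that depth to world-space points, then renders the points at a new camera with a z-buffer. The output is a partial image plus a coverage mask, which is one common way a 3D scaffold can condition a novel view generator. This page does not describe One2Scene's actual conditioning signal or its Gaussian rasterizer. The helper names and the camera convention (x right, y down, z forward, 4x4 pose matrices) are assumptions.

```python
import numpy as np

# Hypothetical helpers: a point-splat stand-in for a Gaussian Splatting scaffold.
# Camera convention (assumed): x right, y down, z forward; poses are 4x4 matrices.

def unproject(depth, K, c2w):
    """Lift an (H, W) depth map to (H*W, 3) world-space points in row-major order."""
    H, W = depth.shape
    u, v = np.meshgrid(np.arange(W) + 0.5, np.arange(H) + 0.5)  # pixel centres
    pix = np.stack([u, v, np.ones_like(u)], axis=-1).reshape(-1, 3)
    cam = (pix @ np.linalg.inv(K).T) * depth.reshape(-1, 1)     # camera-space points
    return cam @ c2w[:3, :3].T + c2w[:3, 3]                     # camera -> world

def render_points(points, colors, K, w2c, H, W):
    """Z-buffered splat of coloured points; returns (H, W, C) image and (H, W) coverage mask."""
    cam = points @ w2c[:3, :3].T + w2c[:3, 3]                   # world -> camera
    front = cam[:, 2] > 1e-6                                    # drop points behind the camera
    cam, colors = cam[front], colors[front]
    proj = cam @ K.T
    px = np.floor(proj[:, 0] / proj[:, 2]).astype(int)
    py = np.floor(proj[:, 1] / proj[:, 2]).astype(int)
    inside = (px >= 0) & (px < W) & (py >= 0) & (py < H)
    lin = (py * W + px)[inside]                                 # flat pixel index
    z, colors = cam[inside, 2], colors[inside]

    # Sort by pixel, then by depth, and keep the first (nearest) point per pixel.
    order = np.lexsort((z, lin))
    lin, colors = lin[order], colors[order]
    first = np.unique(lin, return_index=True)[1]
    image = np.zeros((H * W, colors.shape[1]))
    mask = np.zeros(H * W, dtype=bool)
    image[lin[first]] = colors[first]
    mask[lin[first]] = True
    return image.reshape(H, W, -1), mask.reshape(H, W)
```

Where the mask is `False`, the scaffold has no coverage, so a generator would have to synthesize that content. Covered pixels constrain the generator to agree with the scaffold's geometry. Keeping exactly one point per pixel with `np.unique` avoids relying on NumPy's unspecified order for assignments with repeated indices.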

Result Gallery

Explorable 3D scenes generated from a single input image, across different environments and styles.

Public Space (Photorealistic Style)

Creative Workspace (Photorealistic Style)

Modern Living Space (Photorealistic Style)

Modern Workspace (Photorealistic Style)

Indoor Dining Room (Photorealistic Style)

Office Environment (Photorealistic Style)

Suburban Outdoor (Photorealistic Style)

Indoor Passageways (Photorealistic Style)

Dining Space (Minecraft Style)

Living Room (Anime Style)

Cozy Living Space (Anime Style)

Study Room (Anime Style)

Outdoor Scene (Minecraft Style)

Aquatic Landscape (Photorealistic Style)

Suburban Street (Photorealistic Style)

Aquatic Landscape (Anime Style)

Comparison with Other Methods

We compare videos generated by One2Scene with those of baseline methods across different scenarios. Our method shows better geometric consistency and visual quality in explorable 3D scene generation.

Point Cloud Reconstruction Comparison

We compare the 3D point clouds reconstructed by One2Scene with those of baseline methods. Our method produces point clouds that are more geometrically accurate and complete, with better color fidelity.
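This page does not state which metrics support the point-cloud comparison. Accuracy and completeness are one standard way to quantify claims that a point cloud is "more geometrically accurate and complete". They are the two directions of the Chamfer distance. The sketch below is illustrative only and is not the authors' evaluation protocol. It assumes both clouds are already aligned in the same coordinate frame and scale.

```python
import numpy as np

# Illustrative metric sketch; not necessarily what One2Scene reports.
def accuracy_completeness(pred, gt):
    """Mean nearest-neighbour distances between (N, 3) predicted and (M, 3) reference points.

    accuracy:     mean distance from each predicted point to the reference (lower is better)
    completeness: mean distance from each reference point to the prediction (lower is better)
    The third value is their average, a symmetric Chamfer distance.
    """
    d = np.linalg.norm(pred[:, None, :] - gt[None, :, :], axis=-1)  # (N, M) pairwise distances
    accuracy = d.min(axis=1).mean()
    completeness = d.min(axis=0).mean()
    return accuracy, completeness, 0.5 * (accuracy + completeness)
```

A prediction that is precise but covers only part of the scene scores well on accuracy and poorly on completeness, which is why the two are reported separately. The dense distance matrix needs O(NM) memory. For point clouds of realistic size, a KD-tree query such as `scipy.spatial.cKDTree(gt).query(pred)` is the usual replacement.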


Additional Outdoor Generation Results

More examples of explorable 3D scene generation in outdoor environments. Our method maintains consistent geometric accuracy and visual quality across different types of outdoor scenes. Each video shows smooth camera navigation through the reconstructed 3D environment.

Aquatic Landscape (Photorealistic Style)

City (Photorealistic Style)

Verdant Landscape (Photorealistic Style)

Aquatic Landscape (Minecraft Game Style)

City (Anime Style)

Terrestrial Landscape (Anime Style)

Verdant Landscape (Minecraft Game Style)

City (Minecraft Game Style)